worn versions of it. It comprises a headworn or neck-worn pendant that contains both a data projector and a camera. Headworn versions built at the MIT Media Lab in 1997 (by Mann) combined cameras and illumination systems for interactive photographic art, and also included gesture recognition (e.g. finger-tracking using colored tape on the fingers). Mann referred to this wearable computing technology as affording a “Synthetic Synesthesia of the Sixth Sense”, believing that wearable computing and digital information could act in addition to the five traditional senses. Ten years later, Pattie Maes, also of the MIT Media Lab, used the term “Sixth Sense” in this same context in a TED talk.

https://www.ted.com/talks/pranav_mistry_the_thrilling_potential_of_sixthsense_technology?language=en

Subsequently, other inventors have used the term sixth-sense technology to describe new capabilities that augment the traditional five human senses. For example, in their 2012-13 patent applications, Timo Platt et al. refer to their new communications invention as creating a new social and personal sense, i.e., a “metaphorical sixth sense”, that enables users (while retaining their privacy and anonymity) to sense and share the “stories” and other attributes and information of those around them.

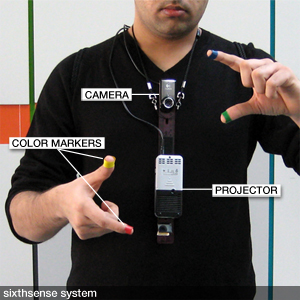

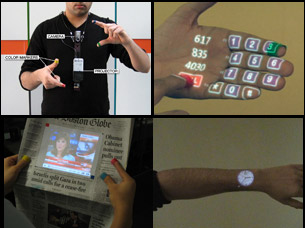

The SixthSense apparatus consists of a pocket projector and a camera housed in a head-mounted, handheld, or pendant-like wearable device. Both the projector and the camera are connected to a mobile computing device in the user’s pocket. The projector projects visual information, enabling surfaces, walls, and physical objects around the user to be used as interfaces, while the camera recognizes and tracks the user’s hand gestures and physical objects using computer-vision-based techniques. The software processes the video stream captured by the camera and tracks the locations of the colored markers (visual tracking fiducials) at the tips of the user’s fingers. The movements and arrangements of these fiducials are interpreted as gestures that act as interaction instructions for the projected application interfaces. SixthSense supports multi-touch and multi-user interaction.
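How fiducial movements and arrangements might be turned into gestures can be illustrated with a minimal Python sketch. It is not the SixthSense implementation: the function `classify_gesture`, the marker names `thumb` and `index`, the gesture labels, and both pixel thresholds are hypothetical choices made for illustration. The sketch compares the marker positions in two consecutive frames and labels the change.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) position of one fingertip marker, in pixels


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two marker positions."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def classify_gesture(
    previous: Dict[str, Point],
    current: Dict[str, Point],
    pinch_threshold: float = 30.0,  # assumed: markers closer than this count as touching
    move_threshold: float = 15.0,   # assumed: smaller changes are treated as jitter
) -> str:
    """Label the change between two frames of 'thumb' and 'index' marker positions."""
    spread_before = distance(previous["thumb"], previous["index"])
    spread_now = distance(current["thumb"], current["index"])
    travel = distance(previous["index"], current["index"])

    pinched_before = spread_before < pinch_threshold
    pinched_now = spread_now < pinch_threshold

    # Fingers stay together while the hand moves: treat as dragging.
    if pinched_before and pinched_now and travel > move_threshold:
        return "drag"
    # Fingers are together in the current frame: treat as a pinch.
    if pinched_now:
        return "pinch"
    # Fingers are apart; report whether the gap grew or shrank noticeably.
    if spread_now - spread_before > move_threshold:
        return "spread"
    if spread_before - spread_now > move_threshold:
        return "squeeze"
    return "idle"


# Example: the two markers stay 10 px apart while moving 50 px to the right.
before = {"thumb": (100.0, 100.0), "index": (110.0, 100.0)}
after = {"thumb": (150.0, 100.0), "index": (160.0, 100.0)}
print(classify_gesture(before, after))  # drag
```

A real system would likely smooth positions over several frames and handle more markers. The two-frame comparison here only shows the principle of mapping marker arrangement and motion to an interaction instruction.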

Mann has described how the SixthSense apparatus can allow a body-worn computer to recognise gestures. If the user attaches colored tape to his or her fingertips, of a color distinct from the background, the software can track the position of those fingers.
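The color-tracking idea can be sketched in a few lines of Python. This is an illustrative simplification, not the actual SixthSense software: the helper names `is_marker` and `track_marker`, the per-channel RGB tolerance, and the tiny synthetic frame are all assumptions. The sketch marks every pixel whose color is close to the tape color and reports the centroid of those pixels as the fingertip position.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each channel 0-255
Frame = List[List[Pixel]]      # frame[row][column]


def is_marker(pixel: Pixel, target: Pixel, tolerance: int) -> bool:
    """True if every channel of `pixel` is within `tolerance` of `target`."""
    return all(abs(p - t) <= tolerance for p, t in zip(pixel, target))


def track_marker(
    frame: Frame, target: Pixel, tolerance: int = 40
) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of pixels matching the tape color, or None."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if is_marker(pixel, target, tolerance):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # marker not visible in this frame
    return (sum_x / count, sum_y / count)


# Synthetic 4x4 frame: grey background with a 2x2 patch of red "tape".
GREY, RED = (120, 120, 120), (230, 20, 30)
frame = [[GREY] * 4 for _ in range(4)]
for y in (1, 2):
    for x in (2, 3):
        frame[y][x] = RED

print(track_marker(frame, target=(255, 0, 0)))  # (2.5, 1.5)
```

The requirement that the tape color be distinct from the background shows up directly in the tolerance test: if background pixels fell within the tolerance of the target color, they would pull the centroid away from the fingertip.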

For an overview of this technology, see the TED talk by its developer, Pranav Mistry, who currently leads the project.

https://www.ted.com/talks/pranav_mistry_the_thrilling_potential_of_sixthsense_technology?language=en